Writing Your Own Crawler to Add Full-Text Search to Your Blog Articles

Final result

I. Introduction

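CSDN only lets you search your own articles by keywords in the title. Once you have many articles and can no longer remember their exact titles, finding one becomes tedious. So let's build a search feature ourselves.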

II. Approach

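Crawl all of your own CSDN articles to the local disk.

Write a web server that performs the search.

Write a static HTML page for entering the search keyword and displaying the results.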

III. Roll Up Your Sleeves and Write the Code

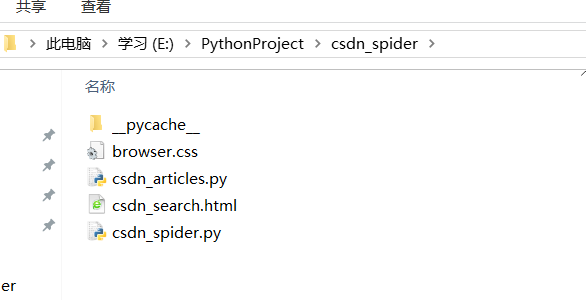

The project's directory structure:

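1. Python crawler: download your own CSDN articles

Python version: 3.8

Required libraries: requests, BeautifulSoup (package beautifulsoup4), and lxml, which the code passes to BeautifulSoup as its parser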

csdn_spider.py

```python
# csdn_spider.py
import base64
import json
import os
import re
import threading

import requests
from bs4 import BeautifulSoup

# Total number of article-list pages on the blog
PAGE_CNT = 16

BLOG_URL = 'https://blog.csdn.net/linxinfa/article/list/'

DOWNLOADD_DIR = 'articles/'

page_done_cnt = 0
article_cnt = 0
title2url = None

# The crawler threads share the counters and title2url, so guard updates with a lock
lock = threading.Lock()


def get_all_aritcles(page):
    """Crawl one article-list page and download every article listed on it."""
    global page_done_cnt, article_cnt

    web_url = BLOG_URL + str(page)
    r = requests.get(web_url, timeout=10)
    # Remove <br> line breaks
    html_txt = r.text.replace('<br>', '').replace('<br/>', '')

    soup = BeautifulSoup(html_txt, 'lxml')
    tag_main = soup.find('main')
    tag_div = tag_main.find('div', class_='article-list') if tag_main else None
    if tag_div:
        for tag_h4 in tag_div.find_all('h4'):
            tag_a = tag_h4.find('a')
            if tag_a is None or 'linxinfa' not in tag_a.get('href', ''):
                continue
            url = tag_a['href']
            # tag_a.string can't be used for the title: <a> also contains a child
            # <span> (the article-type label), so keep only <a>'s own text nodes
            title = ''.join(tag_a.find_all(string=True, recursive=False)).strip()

            # The title is Base64-encoded and used as the filename; '/' is not
            # allowed in filenames, so it is replaced with '%'
            encode_title = base64.b64encode(title.encode('utf-8')).decode('utf-8').replace('/', '%')
            download_article(encode_title, url)

            with lock:
                print('%s %s: %s' % (article_cnt, title, url))
                title2url[encode_title] = url
                article_cnt += 1

    with lock:
        page_done_cnt += 1


def download_article(title, url):
    """Save the <article> element of one post as DOWNLOADD_DIR/<title>.html."""
    r = requests.get(url, timeout=10)
    # Keep only the content of the <article> tag (re.S lets '.' match newlines)
    match = re.search(r'<article class="baidu_pl">(.*)</article>', r.text, re.S)
    if match:
        content_txt = match.group(1)
        with open(DOWNLOADD_DIR + title + '.html', 'w', encoding='utf-8') as fw:
            fw.write('<article class="baidu_pl">' + content_txt + '</article>')


def create_download_dir():
    os.makedirs(DOWNLOADD_DIR, exist_ok=True)


def save_title2url_json():
    with open('title2url.json', 'w', encoding='utf-8') as fw:
        json.dump(title2url, fw, indent=2)


def search_article(search_txt):
    """Return [{'title': ..., 'url': ...}] for every saved article containing search_txt."""
    global title2url
    target_articles = []
    if title2url is None:
        with open('title2url.json', 'r', encoding='utf-8') as fr:
            title2url = json.load(fr)

    for root, dirs, fs in os.walk(DOWNLOADD_DIR):
        for f in fs:
            if not f.endswith('.html'):
                continue
            with open(os.path.join(root, f), 'r', encoding='utf-8') as fr:
                article_txt = fr.read()
            # Plain substring match: the keyword is user input, and treating it
            # as a regex would make input such as "C++" raise re.error
            if search_txt in article_txt:
                # title2url is keyed by the filename stem (still containing '%'),
                # so look it up first, then restore '/' before decoding
                k = f[:-len('.html')]
                if k in title2url:
                    title = base64.b64decode(k.replace('%', '/')).decode('utf-8')
                    target_articles.append({'title': title, 'url': title2url[k]})
    return target_articles


if __name__ == '__main__':
    title2url = {}
    create_download_dir()
    # One thread per list page
    threads = [threading.Thread(target=get_all_aritcles, args=(i,))
               for i in range(1, PAGE_CNT + 1)]
    for t in threads:
        t.start()
    # join() also returns if a thread dies with an exception, whereas polling
    # page_done_cnt would then wait forever
    for t in threads:
        t.join()
    save_title2url_json()
    print('done: %d/%d pages, %d articles' % (page_done_cnt, PAGE_CNT, article_cnt))
```
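The main block starts one raw thread per list page. An alternative is concurrent.futures.ThreadPoolExecutor, which caps the number of concurrent requests and returns each page's results without shared globals. The sketch below assumes this approach. fetch_page_titles is a hypothetical stand-in for get_all_aritcles that returns a dict instead of writing into title2url. It returns placeholder data so the sketch runs offline.

```python
from concurrent.futures import ThreadPoolExecutor

PAGE_CNT = 16


def fetch_page_titles(page):
    """Hypothetical stand-in for get_all_aritcles(): return {encoded_title: url}
    for one list page. It returns one placeholder entry per page; a real version
    would download and parse BLOG_URL + str(page)."""
    return {'title-%d' % page: 'https://example.com/page/%d' % page}


def crawl_all(page_cnt, max_workers=4):
    """Crawl pages 1..page_cnt with a bounded thread pool and merge the results."""
    title2url = {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # map() yields results in page order; an exception raised in a worker
        # is re-raised here instead of being silently lost
        for page_result in pool.map(fetch_page_titles, range(1, page_cnt + 1)):
            title2url.update(page_result)
    return title2url


if __name__ == '__main__':
    print(len(crawl_all(PAGE_CNT)))  # 16
```

Because only the main thread touches the merged dict, no lock is needed, and max_workers limits how hard the crawler hits the site.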

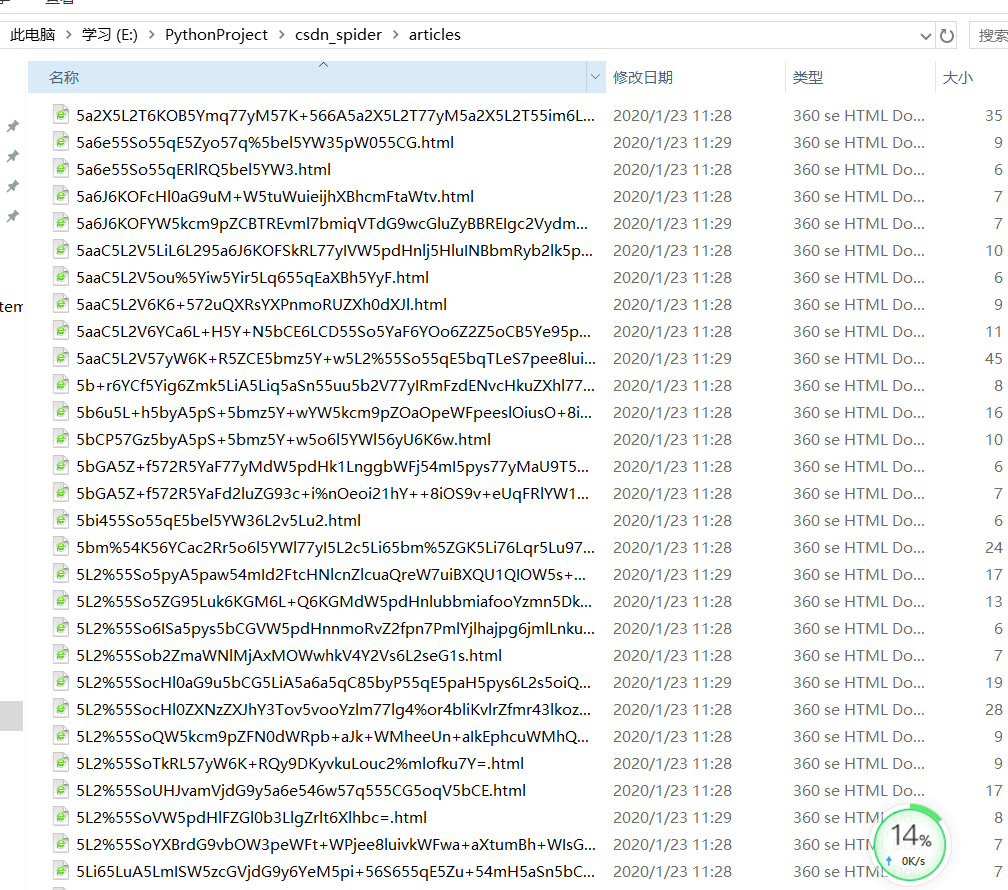

When the script runs, the articles are downloaded into the local articles directory.
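Each saved file contains only the part of the page between `<article class="baidu_pl">` and `</article>`. The extraction step can be sketched in isolation on a made-up page. The sketch uses the same greedy pattern as download_article, with re.S so that `.` also matches newlines. The original `(.|\n)*` spelling matches the same text, only more slowly. Because the pattern is greedy, it runs to the last `</article>` if a page ever contains more than one. extract_article is a hypothetical helper name.

```python
import re

ARTICLE_RE = re.compile(r'<article class="baidu_pl">(.*)</article>', re.S)


def extract_article(html_text):
    """Return the inner HTML of <article class="baidu_pl">, or None if absent."""
    match = ARTICLE_RE.search(html_text)
    return match.group(1) if match else None


# Made-up page, for illustration only
page = '''<html><body>
<h1>Title</h1>
<article class="baidu_pl">
<p>line 1</p>
<p>line 2</p>
</article>
<footer>...</footer>
</body></html>'''

if __name__ == '__main__':
    print(repr(extract_article(page)))  # '\n<p>line 1</p>\n<p>line 2</p>\n'
```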

Note: each filename is the Base64 encoding of the article title, with / replaced by %, because / is not allowed in filenames.
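The filename scheme can be checked with a small round-trip sketch. The replacement is reversible because % is not in the Base64 alphabet. The helper names below are hypothetical. The last function shows an alternative the original does not use: base64.urlsafe_b64encode, which emits - and _ instead of + and /, so no substitution is needed. Base64 is case-sensitive, so on case-insensitive filesystems such as the Windows default, two names differing only in letter case would collide.

```python
import base64


def title_to_filename(title):
    """Encode a title as csdn_spider.py does: Base64, then '/' -> '%'."""
    encoded = base64.b64encode(title.encode('utf-8')).decode('ascii')
    return encoded.replace('/', '%') + '.html'


def filename_to_title(filename):
    """Reverse title_to_filename(): strip '.html', '%' -> '/', Base64-decode."""
    stem = filename[:-len('.html')]
    return base64.b64decode(stem.replace('%', '/')).decode('utf-8')


def title_to_filename_urlsafe(title):
    """Alternative: the URL-safe alphabet never produces '/' in the first place."""
    return base64.urlsafe_b64encode(title.encode('utf-8')).decode('ascii') + '.html'


if __name__ == '__main__':
    print(title_to_filename('???'))           # Pz8%.html
    print(filename_to_title('Pz8%.html'))     # ???
    print(title_to_filename_urlsafe('???'))   # Pz8_.html
```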

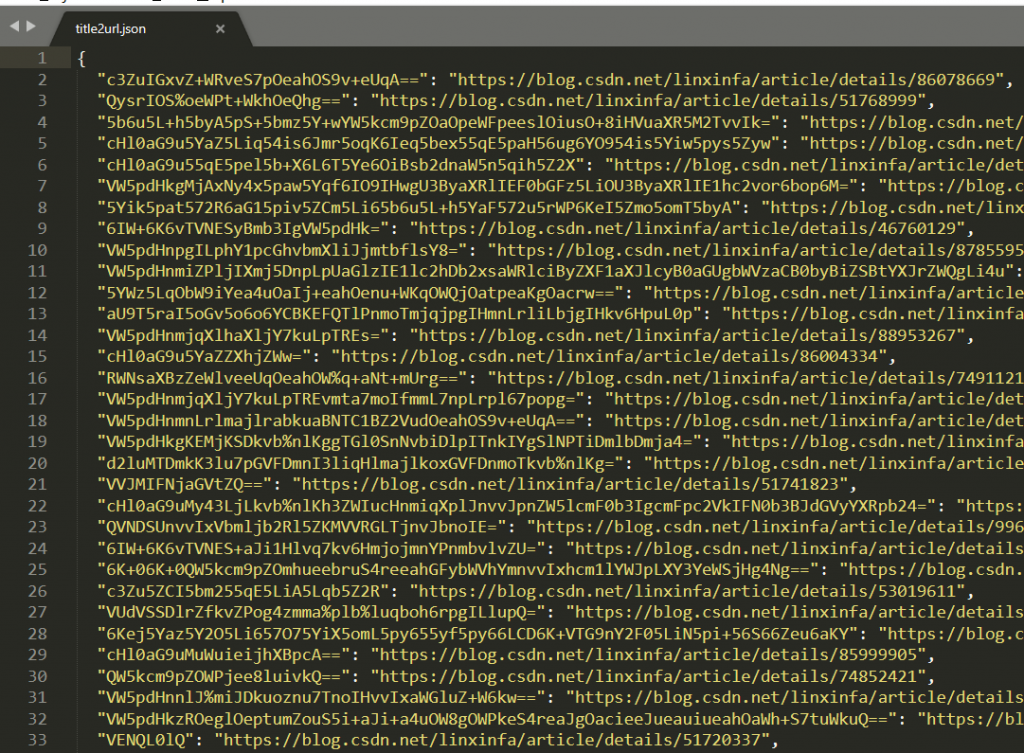

The script also writes a title-to-URL mapping table, title2url.json.
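search_article matches the keyword against the raw saved HTML, case-sensitively. A keyword such as class or span therefore also matches markup, and unity does not find Unity. The sketch below is a variant with three changes: it strips tags with a simple regex, unescapes HTML entities, and compares case-insensitively. article_matches and search_dir are hypothetical names, and the tag-stripping regex is a rough heuristic, not a real HTML parser.

```python
import html
import os
import re

TAG_RE = re.compile(r'<[^>]+>')


def article_matches(html_text, keyword):
    """True if keyword occurs in the visible text of html_text, ignoring case."""
    text = html.unescape(TAG_RE.sub(' ', html_text))
    return keyword.casefold() in text.casefold()


def search_dir(directory, keyword):
    """Return the names of the .html files under directory whose text contains keyword."""
    hits = []
    for root, _dirs, files in os.walk(directory):
        for name in sorted(files):
            if not name.endswith('.html'):
                continue
            with open(os.path.join(root, name), encoding='utf-8') as f:
                if article_matches(f.read(), keyword):
                    hits.append(name)
    return hits


if __name__ == '__main__':
    import tempfile
    with tempfile.TemporaryDirectory() as d:
        with open(os.path.join(d, 'a.html'), 'w', encoding='utf-8') as f:
            f.write('<article class="baidu_pl"><p>Learning UNITY</p></article>')
        print(search_dir(d, 'unity'))  # ['a.html']
```

The returned filenames can then be looked up in title2url.json exactly as search_article does.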

2. Web server

The web server is built on Python's Tornado framework and executes the search.

Required library: tornado

csdn_articles.py

```python
# csdn_articles.py
import json
import platform

import tornado.ioloop
import tornado.web

import csdn_spider

PORT = 8987


class BaseHandler(tornado.web.RequestHandler):
    def set_default_headers(self):
        # Allow cross-origin requests so browser.html, opened from disk, can call
        # the server; "*" can be replaced with a specific origin
        self.set_header('Access-Control-Allow-Origin', '*')
        self.set_header('Access-Control-Allow-Headers', 'x-requested-with')
        self.set_header('Access-Control-Allow-Methods', 'POST, GET, OPTIONS')

    def post(self):
        self.write('some post')

    def get(self):
        self.write('some get')

    def options(self, *args):
        # CORS preflight request: reply with no body
        self.set_status(204)
        self.finish()


class SearchHandler(BaseHandler):
    def post(self):
        jd = get_json_from_bytes(self.request.body)
        print('SearchHandler.post', jd)
        if not isinstance(jd, dict) or 'txt' not in jd:
            self.write('data error')
        else:
            target_articles = csdn_spider.search_article(jd['txt'])
            print(target_articles)
            self.write(json.dumps(target_articles))


def get_json_from_bytes(data_bytes):
    """Decode a UTF-8 request body as JSON; return None if it is not valid JSON."""
    txt = str(data_bytes, encoding='utf8')
    if is_json(txt):
        return json.loads(txt)
    print('get_json_from_bytes Error:', txt)
    return None


def is_json(txt):
    try:
        json.loads(txt)
    except ValueError:
        return False
    return True


def make_app():
    return tornado.web.Application([
        (r'/search_article', SearchHandler),
    ])


if __name__ == '__main__':
    # Since Python 3.8 the default asyncio event loop on Windows is the Proactor
    # loop, which older Tornado versions cannot use; switch to the selector loop
    if platform.system() == 'Windows':
        import asyncio
        asyncio.set_event_loop_policy(asyncio.WindowsSelectorEventLoopPolicy())

    app = make_app()
    app.listen(PORT)
    print('start web server successfully, port:', PORT)
    tornado.ioloop.IOLoop.current().start()
```
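get_json_from_bytes parses the body twice, once inside is_json and once more to return it. It also lets a UnicodeDecodeError escape, because the decode happens outside the try block. A single-pass alternative can be sketched as follows. parse_json_body and get_search_text are hypothetical names. Both json.JSONDecodeError and UnicodeDecodeError are subclasses of ValueError, so one except clause covers them.

```python
import json


def parse_json_body(data_bytes):
    """Decode a request body as UTF-8 JSON in one pass.
    Returns the parsed object, or None if the bytes are not valid UTF-8 or valid JSON."""
    try:
        return json.loads(data_bytes.decode('utf-8'))
    except ValueError:  # covers json.JSONDecodeError and UnicodeDecodeError
        return None


def get_search_text(data_bytes):
    """Return the 'txt' string of a {"txt": ...} body, or None if it is missing."""
    jd = parse_json_body(data_bytes)
    if isinstance(jd, dict) and isinstance(jd.get('txt'), str):
        return jd['txt']
    return None
```

Inside SearchHandler.post, a None from get_search_text would map to the existing 'data error' reply.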

3. Static web page

The page takes the search keyword as input and displays the results.

browser.html

```html
<!DOCTYPE html>
<html>
<head>
    <meta charset="utf-8">
    <title>linxinfa CSDN Blog Search</title>
    <link href="browser.css" type="text/css" rel="stylesheet" />
</head>
<body>
    <h1>linxinfa CSDN Blog Search</h1>
    Enter a keyword <input id="txt" type="text" name="version" value="">
    <button onclick="OnSearchBtn()">Search</button>
    <table cellspacing="1" width="100%">
        <thead>
            <tr>
                <th width="10%">index</th>
                <th width="50%">Article</th>
            </tr>
        </thead>
        <tbody id='article_table'>
        </tbody>
    </table>

    <script>
        var ADDRESS = 'http://127.0.0.1:8987/';

        function isJSON(str) {
            if (typeof str !== 'string') {
                console.log('It is not a string!');
                return false;
            }
            try {
                JSON.parse(str);
                return true;
            } catch (e) {
                return false;
            }
        }

        function OnSearchBtn() {
            var txt = document.getElementById('txt');
            var data = { 'txt': txt.value };
            var request_url = ADDRESS + 'search_article';
            var request = new XMLHttpRequest();
            request.open('POST', request_url, true);

            request.onload = function (e) {
                console.log('request finished');
                if (request.status !== 200) {
                    console.log('search_article error, request.status: ' + request.status);
                    return;
                }
                console.log(request.responseText);

                // Clear the previous results
                var tbody = document.getElementById('article_table');
                while (tbody.firstChild) {
                    tbody.removeChild(tbody.firstChild);
                }

                if (!isJSON(request.responseText)) {
                    return;
                }
                var jd = JSON.parse(request.responseText);
                for (var i = 0; i < jd.length; i++) {
                    // One row per article: an index cell and a link cell
                    var tr = document.createElement('tr');
                    tbody.appendChild(tr);

                    var td = document.createElement('td');
                    td.textContent = i + 1;
                    tr.appendChild(td);

                    td = document.createElement('td');
                    var a = document.createElement('a');
                    a.textContent = jd[i]['title'];
                    a.href = jd[i]['url'];
                    a.target = '_blank';  // open the article in a new tab
                    td.appendChild(a);
                    tr.appendChild(td);
                }
            };

            request.onerror = function (e) {
                console.log('search_article error, status:' + request.status + ', statusText: ' + request.statusText);
            };

            // Attach the handlers before sending the request
            request.send(JSON.stringify(data));
        }
    </script>
</body>
</html>
```

browser.css

```css
table {
    border-collapse: collapse;
    text-align: center;
}

td,
th {
    border: 1px solid #333;
    text-align: left;
}

thead tr {
    background-color: #004B97;
    color: white;
}
```

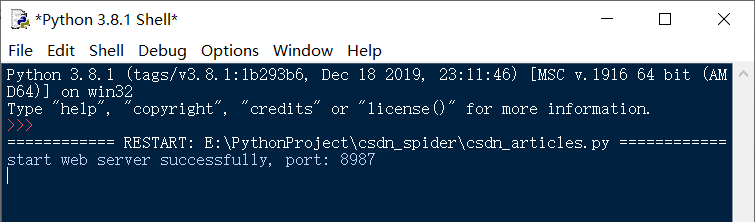

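IV. Start the Web Server and Test

Run csdn_articles.py to start the web server.

Open browser.html in a browser.

Search for Unity, and every blog article containing the keyword Unity is listed (an article matches if its title or its content contains the keyword).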
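The endpoint can also be exercised without the page. Below is a stdlib sketch that sends the same POST body browser.html sends, {"txt": keyword}, to the address the page uses. build_search_request and search are hypothetical helper names. search requires csdn_articles.py to be running. It raises a JSON error if the server replies with the plain-text 'data error' message.

```python
import json
import urllib.request

ADDRESS = 'http://127.0.0.1:8987/'  # the address browser.html uses


def build_search_request(keyword):
    """Build the same POST that browser.html sends: a JSON body {"txt": keyword}."""
    body = json.dumps({'txt': keyword}).encode('utf-8')
    return urllib.request.Request(ADDRESS + 'search_article', data=body,
                                  headers={'Content-Type': 'application/json'},
                                  method='POST')


def search(keyword, timeout=10):
    """Send the request and return the list of {"title", "url"} dicts."""
    with urllib.request.urlopen(build_search_request(keyword), timeout=timeout) as resp:
        return json.loads(resp.read().decode('utf-8'))


if __name__ == '__main__':
    for i, article in enumerate(search('Unity'), start=1):
        print(i, article['title'], article['url'])
```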

————————————————

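Reposted from Lin Xinfa's (林新发) CSDN blog

Original article: https://blog.csdn.net/linxinfa/article/details/104075112

Writing Your Own Crawler to Add Full-Text Search to Your Blog Articles: https://www.gzza.com/1214.html

Resources on this site are collected from the Internet and are provided for study and exchange only; do not use them commercially. Unless otherwise stated, please credit the source when reposting original content from this site.

For infringement or other concerns, contact us for removal. Email: master@gzza.com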
